Evolution

Evolution

Intelligent Design

Intelligent Design

Conservation of Information — The Theorems

I am reviewing Jason Rosenhouse’s new book, The Failures of Mathematical Anti-Evolutionism (Cambridge University Press), serially. For the full series so far, go here.

Until about 2007, conservation of information functioned more like a forensic tool for discovering and analyzing surreptitious insertions of information: So and so says they got information for nothing. Let’s see what they actually did. Oh yeah, here’s where they snuck in the information. Around 2007, however, a fundamental shift occurred in my work on conservation of information. Bob Marks and I began to collaborate in earnest, and then two very bright students of his also came on board. Initially we were analyzing some of the artificial life simulations that Jason Rosenhouse mentions in his book, as well as some other simulations (such as Thomas Schneider’s ev). As noted, we found that the information emerging from these systems was always more than adequately accounted for in terms of the information initially inputted.

Yet around 2007, we started proving theorems that precisely tracked the information in these systems, laying out their information costs, in exact quantitative terms, and showing that the information problem always became quantitatively no better, and often worse, the further one backtracked causally to explain it. Conservation of information therefore doesn’t so much say that information is conserved as that at best it could be conserved and that the amount of information to be accounted for, when causally backtracked, may actually increase. This is in stark contrast to Darwinism, which attempts to explain complexity from simplicity rather than from equal or greater complexity. Essentially, then, conservation of information theorems argue for an information regress. This regress could then be interpreted in one of two ways: (1) the information was always there, front-loaded from the beginning; or (2) the information was put in, exogenously, by an intelligence.

An Article of Faith

Rosenhouse feels the force of the first option. True, he dismisses conservation of information theorems as in the end “merely asking why the universe is as it is.” (p. 217) But when discussing artificial life, he admits, in line with the conservation of information theorems, that crucial information is not just in the algorithm but also in the environment. (p. 214) Yet if the crucial information for biological evolution (as opposed to artificial life evolution) is built into the environment, where exactly is it and how exactly is it structured? It does no good to say, as Rosenhouse does, that “natural selection serves as a conduit for transmitting environmental information into the genomes of organisms.” (p. 215) That’s simply an article of faith. Templeton Prize winner Holmes Rolston, who is not an ID guy, rejects this view outright. Writing on the genesis of information in his book Genes, Genesis, and God (pp. 352–353), he responded to the view that the information was always there:

The information (in DNA) is interlocked with an information producer-processor (the organism) that can transcribe, incarnate, metabolize, and reproduce it. All such information once upon a time did not exist but came into place; this is the locus of creativity. Nevertheless, on Earth, there is this result during evolutionary history. The result involves significant achievements in cybernetic creativity, essentially incremental gains in information that have been conserved and elaborated over evolutionary history. The know-how, so to speak, to make salt is already in the sodium and chlorine, but the know-how to make hemoglobin molecules and lemurs is not secretly coded in the carbon, hydrogen, and nitrogen….

So no, the information was not always there. And no, Darwinian evolution cannot, according to the conservation of information theorems, create information from scratch. The way out of this predicament for Darwinists (and I’ve seen this move repeatedly from them) is to say that conservation of information may characterize computer simulations of evolution, but that real-life evolution has some features not captured by the simulations. But if so, how can real-life evolution be subject to scientific theory if it resists all attempts to model it as a search? Conservation of information theorems are perfectly general, covering all search.

Push Comes to Shove

Yet ironically, Rosenhouse is in no position to take this way out because, as noted in my last post in this series, he sees these computer programs as “not so much simulations of evolution [but as] instances of it.” (p. 209) Nonetheless, when push comes to shove, Rosenhouse has no choice, even at the cost of inconsistency, but to double down on natural selection as the key to creating biological information. The conservation of information theorems, however, show that natural selection, if it’s going to have any scientific basis, merely siphons from existing sources of information, and thus cannot ultimately explain it.

As with specified complexity, in proving conservation of information theorems, we have taken a largely pre-theoretic notion and turned it into a full-fledged theoretic notion. In the idiom of Rosenhouse, we have moved the concept from track 1 to track 2. A reasonably extensive technical literature on conservation of information theorems now exists. Here are three seminal peer-reviewed articles addressing these theorems on which I’ve collaborated (for more, go here):

- William A. Dembski and Robert J. Marks II “Conservation of Information in Search: Measuring the Cost of Success,” IEEE Transactions on Systems, Man and Cybernetics A, Systems & Humans, vol.5, #5, September 2009, pp.1051-1061

- William A. Dembski and Robert J. Marks II, “The Search for a Search: Measuring the Information Cost of Higher Level Search,” Journal of Advanced Computational Intelligence and Intelligent Informatics, Vol.14, No.5, 2010, pp. 475-486.

- William A. Dembski, Winston Ewert and Robert J. Marks II, “A General Theory of Information Cost Incurred by Successful Search,” Biological Information (Singapore: World Scientific, 2013), pp. 26-63.

A Conspiracy of Silence

Rosenhouse cites none of this literature. In this regard, he follows Wikipedia, whose subentry on conservation of information likewise fails to cite any of this literature. The most recent reference in that Wikipedia subentry is to a 2002 essay by Erik Tellgren, in which he claims that my work on conservation of information is “mathematically unsubstantiated.” That was well before any of the above theorems were ever proved. That’s like writing in the 1940s, when DNA’s role in heredity was unclear, that its role in heredity was “biologically unsubstantiated,” and leaving that statement in place even after the structure of DNA (by 1953) and the genetic code (by 1961) had been elucidated. It’s been two decades since Tellgren made this statement, and it remains in Wikipedia as the authoritative smackdown of conservation of information.

At least it can be said of Rosenhouse’s criticism of conservation of information that it is more up to date than Wikipedia’s account of it. But Rosenhouse leaves the key literature in this area uncited and unexplained (and if he did cite it, I expect he would misexplain it). Proponents of intelligent design have grown accustomed to this conspiracy of silence, where anything that rigorously undermines Darwinism is firmly ignored (much like our contemporary media is selective in its reporting, focusing exclusively on the party line and sidestepping anything that doesn’t fit the desired narrative). Indeed, I challenge readers of this review to try to get the three above references inserted into this Wikipedia subentry. Good luck getting past the biased editors who control all Wikipedia entries related to intelligent design.

So, what is a conservation of information theorem? Readers of Rosenhouse’s book learn that such theorems exist. But Rosenhouse neither states nor summarizes these theorems. The only relevant theorems he recaps are the no free lunch theorems, which show that no algorithm outperforms any other algorithm when suitably averaged across various types of fitness landscapes. But conservation of information theorems are not no free lunch theorems. Conservation of information picks up where no free lunch leaves off. No free lunch says there’s no universally superior search algorithm. Thus, to the degree a search does well at some tasks, it does poorly at others. No free lunch in effect states that every search involves a zero-sum tradeoff. Conservation of information, by contrast, starts by admitting that for particular searches, some do better than others, and then asks what allows one search to do better than another. It answers that question in terms of active information. Conservation of information theorems characterize active information.

A Bogus Notion?

To read Rosenhouse, you would think that active information is a bogus notion. But in fact, active information is a useful concept that all of us understand intuitively, even if we haven’t put a name to it. It arises in search. Search is a very general concept, and it encompasses evolution (Rosenhouse, recall, even characterized evolution in terms of “searching protein space”). Most interesting searches are needle-in-the-haystack problems. What this means is that there’s a baseline search that could in principle find the needle (e.g., exhaustive search or uniform random sampling), but that would be highly unlikely to find the needle in any realistic amount of time. What you need, then, is a better search, one that can find the needle with a higher probability so that it is likely, with the time and resources on hand, to actually find the needle.

We all recognize active information. You’re on a large field. You know an easter egg is hidden somewhere in it. Your baseline search is hopeless — you stand no realistic chance of finding the Easter egg. But now someone tells you warm, cold, warm, warmer, hot, you’re burning up. That’s a better search, and it’s better because you are being given better information. Active information measures the amount of information that needs to be expended to improve on a baseline search to make it a better search. In this example, note that there are many possible directions that Easter egg hunters might receive in order to try to find the egg. Most such directions will not lead to finding the egg. Accordingly, if finding the egg is finding a needle in a haystack, so is finding the right directions among the different possible directions. Active information measures the information cost of finding the right directions.

Treasure Island

In the same vein, consider a search for treasure on an island. If the island is large and the treasure is well hidden, the baseline search may be hopeless — way too improbable to stand a reasonable chance of finding the treasure. But suppose you now get a treasure map where X marks the spot of the treasure. You’ve now got a better search. What was the informational cost of procuring that better search? Well, it involved sorting through all possible maps of the island and finding one that would identify the treasure location. But for every map where X marks the right spot, there are many where X marks the wrong spot. According to conservation of information, finding the right map faces an improbability no less, and possibly greater, than finding the treasure via the baseline search. Active information measures the relevant (im)probability

We’ve seen active information before in the Dawkins Weasel example. The baseline search for METHINKS IT IS LIKE A WEASEL stands no hope of success. It requires a completely random set of keystrokes typing all the right letters and spaces of this phrase without error in one fell swoop. But given a fitness function that assigns higher fitness to phrases where letters match the target phrase METHINKS IT IS LIKE A WEASEL, we’ve now got a better search, one that will converge to the target phrase quickly and with high probability. Most fitness functions, however, don’t take you anywhere near this target phrase. So how did Dawkins find the right fitness function to evolve to the target phrase? For that, he needed active information.

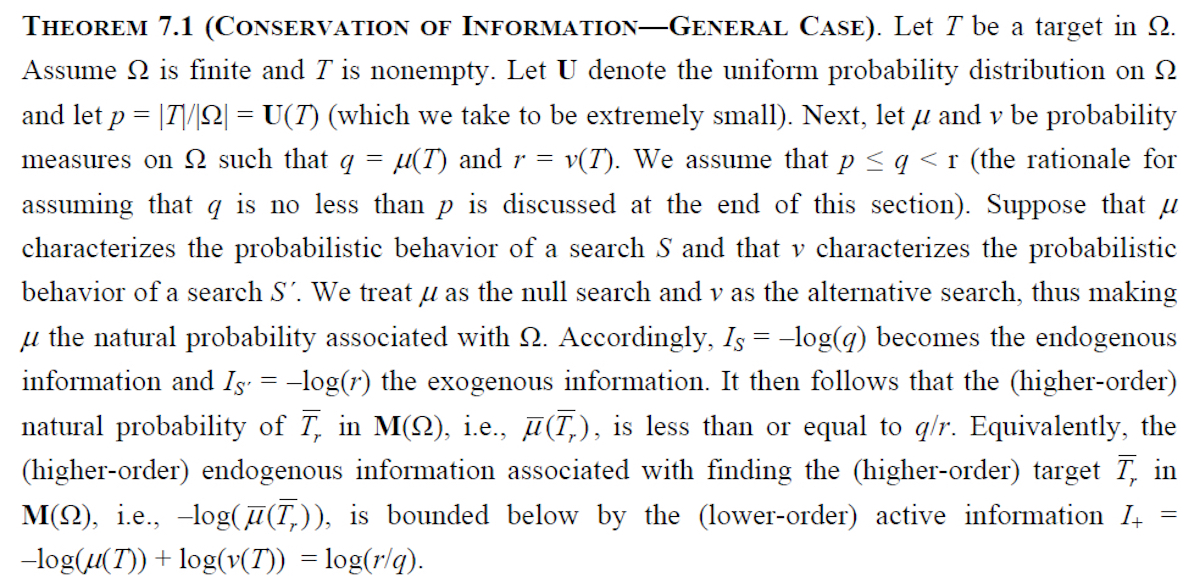

My colleagues and I have proved several conservation of information theorems, which come in different forms depending on the type and structure of information needed to render a search successful. Here’s the most important conservation of information theorem proved to date. It appears in the third article cited above (i.e., “A General Theory of Information Cost Incurred by Successful Search”):

Wrapping Up the Discussion

Even though the statement of this theorem is notation-heavy and will appear opaque to most readers, I give it nonetheless because, as unreadable as it may seem, it exhibits certain features that can be widely appreciated, thereby helping to wrap up this discussion of conservation of information, especially as it relates to Rosenhouse’s critique of the concept. Consider therefore the following three points:

- The first thing to see in this theorem is that it is an actual mathematical theorem. It rises to Rosenhouse’s track 2. A peer-reviewed literature now surrounds the work. The theorem depends on advanced probability theory, measure theory, and functional analysis. The proof requires vector-valued integration. This is graduate-level real analysis. Rosenhouse does algebraic graph theory, so this is not his field, and he gives no indication of actually understanding these theorems. For him to forgo providing even the merest sketch of the mathematics underlying this work because “it would not further our agenda to do so” (p. 212–213) and for him to dismiss these theorems as “trivial musings” (p. 269) betrays an inability to grapple with the math and understand its implications, as much as it betrays his agenda to deep-six conservation of information irrespective of its merits.

- The Greek letter mu denotes a null search and the Greek letter nu an alternative search. These are respectively the baseline search and the better search described earlier. Active information here, measured as log(r/q), measures the information required in a successful search for a search (usually abbreviated S4S), which is the information to find nu to replace mu. Searches can themselves be subject to search, and it’s these higher level searches that are at the heart of the conservation of information theorems. Another thing to note about mu and nu is that they don’t prejudice the types of searches or the probabilities that represent them. Mu and nu are represented as probability measures. But they can be any probability measures that assign at least as much probability to the target T as uniform probability (the assumption being that any search can at least match the performance of a uniform probability search — this seems totally reasonable). What this means is that conservation of information is not tied to uniform probability or equiprobability. Rosenhouse, by contrast, claims that all mathematical intelligent design arguments follow what he calls the Basic Argument from Improbability, which he abbreviates BAI (p. 126). BAI attributes to design proponents the most simple-minded assignment of probabilities (namely uniform probability or equiprobability). Conservation of information, like specified complexity, by contrast, attempts to come to terms with the probabilities as they actually are. This theorem, in its very statement, shows that it does not fall under Rosenhouse’s BAI.

- The search space Omega (Ω) in this example is finite. Its finiteness, however, in no way undercuts the generality of this theorem. All scientific work, insofar as it measures and gauges physical reality, will use finite numbers and finite spaces. The mathematical models used may involve infinities, but these can in practice always be approximated finitely. This means that these models belong to combinatorics. Rosenhouse, throughout his book, makes off that combinatorics is a dirty word, and that intelligent design, insofar as it looks to combinatorics, is focused on simplistic finite models and limits itself to uniform or equiprobabilities. But this is nonsense. Any object, mathematical or physical, consisting of finitely many parts related to each other in finitely many ways is a combinatorial object. Moreover, combinatorial objects don’t care what probability distributions are placed on them. Protein machines are combinatorial objects. Computer programs (and these include the artificial life simulations with which Rosenhouse is infatuated) are combinatorial objects. The bottom line is that it is no criticism at all of intelligent design to say that it makes extensive use of combinatorics.

Next, “Closing Thoughts on Jason Rosenhouse.”

Editor’s note: This review is cross-posted with permission of the author from BillDembski.com.