Culture & Ethics

Neuroscience & Mind

Deep Fakes and Propaganda for Artificial General Intelligence

Deep fakes are an exciting and disturbing application of artificial intelligence. With deep fakes, AI deep learning algorithms are used to create or manipulate audio and video recordings with a high degree of realism. The term “deep fake” is a portmanteau of “deep learning” and “fake,” highlighting the use of deep neural networks to generate convincingly realistic fake content. These AI models are trained on vast datasets of real images, videos, and voice recordings to learn how to replicate human appearances, expressions, and voices with startling accuracy.

Deep fakes are commonly created with two competing AI systems: one that generates the fake images or videos (the generator) and another that attempts to detect the fakes (the discriminator). In generative adversarial networks (GANs), these systems work in opposition to each other, with the generator trying to get the discriminator to accept its fakes as real, and with the discriminator trying to unmask the generator’s fakes. This interplay between generator and discriminator continually improves the quality of the generated fakes until, ideally, they are indistinguishable to the human eye or ear from authentic content.
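To make the tug-of-war between generator and discriminator concrete, here is a minimal sketch in plain Python. It is a toy under stated assumptions, not how any production deep fake system is built: the "real content" is just numbers drawn from a bell curve centered at 4, the generator is a single line `a*z + b`, and the discriminator is a one-variable logistic classifier. All names (`train_toy_gan`, `generator_step`, `discriminator_step`) and all settings (learning rate, step count, the target mean of 4) are hypothetical choices made for illustration.

```python
import math
import random


def sigmoid(s):
    """Numerically stable logistic function."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)


def discriminator_step(w, c, x_real, x_fake, lr):
    """One gradient step on -[log D(x_real) + log(1 - D(x_fake))].

    D(x) = sigmoid(w*x + c) is the discriminator's estimate that x is real.
    """
    d_real = sigmoid(w * x_real + c)
    d_fake = sigmoid(w * x_fake + c)
    grad_w = (d_real - 1.0) * x_real + d_fake * x_fake
    grad_c = (d_real - 1.0) + d_fake
    return w - lr * grad_w, c - lr * grad_c


def generator_step(a, b, w, c, z, lr):
    """One gradient step on -log D(G(z)), where G(z) = a*z + b.

    The generator is rewarded when the discriminator rates its fake as real.
    """
    d_fake = sigmoid(w * (a * z + b) + c)
    grad_x = (d_fake - 1.0) * w  # gradient of the loss w.r.t. the fake sample
    return a - lr * grad_x * z, b - lr * grad_x


def train_toy_gan(steps=2000, lr=0.05, seed=0):
    """Alternate discriminator and generator updates (assumed toy setup)."""
    rng = random.Random(seed)
    a, b = 1.0, 0.0  # generator parameters
    w, c = 0.0, 0.0  # discriminator parameters
    for _ in range(steps):
        x_real = rng.gauss(4.0, 1.0)          # a sample of "authentic" data
        x_fake = a * rng.gauss(0.0, 1.0) + b  # the generator's current fake
        w, c = discriminator_step(w, c, x_real, x_fake, lr)
        a, b = generator_step(a, b, w, c, rng.gauss(0.0, 1.0), lr)
    return a, b, w, c


if __name__ == "__main__":
    a, b, w, c = train_toy_gan()
    print(f"generator: a={a:.3f}, b={b:.3f}; discriminator: w={w:.3f}, c={c:.3f}")
```

Each pass through the loop is one round of the contest: the discriminator is nudged toward telling real samples from fakes, and the generator is then nudged toward whatever the discriminator currently accepts. In a toy this simple the two sides may chase each other rather than settle, which hints at why training real GANs takes considerable care.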

Benign and Malicious Uses

Deep fakes can have benign and malicious uses. On the positive side, they can be used in the entertainment industry to de-age actors, dub languages with lip-sync accuracy, or bring historical figures to life in educational settings. However, the technology also poses significant ethical and societal risks. It can be used to create fake news, manipulate public opinion, impersonate people, fabricate evidence, violate personal privacy, and even make it seem as though someone has been kidnapped. Deep fakes threaten widespread harm to individuals and society.

The rise of deep fakes challenges traditional notions of trust and authenticity in the digital world. As these AI-generated fakes become more sophisticated, distinguishing between real and fake content can become increasingly difficult for both individuals and automated systems, raising profound challenges to information integrity, security, and democracy. Consequently, a growing need exists for advanced detection techniques, legal frameworks, and ethical guidelines to counter the risks associated with deep fake technology.

Inflating AI’s True Record of Achievement

Deep fakes raise an interesting problem for AI and AI’s relation to artificial general intelligence (AGI), which, as an idol for destruction, was the subject of my recent series at Evolution News. It would be one thing if artificial intelligence develops over time so powerfully that eventually it turns into artificial general intelligence (though this prospect is a pipe dream if my previous arguments hold). But what if instead AI is used to make it seem that AGI has been achieved, or is on the cusp of being achieved? This would be like a deceptive research scientist who claims to have made experimental discoveries worthy of a Nobel Prize, only to be shown later to have fabricated the results, with the discoveries being bogus and all the research papers touting them needing to be retracted. We’ve witnessed this sort of thing in the academic world (see the case of J. Hendrik Schön in my piece “Academic Steroids: Plagiarism and Data Falsification as Performance Enhancers in Higher Education”).

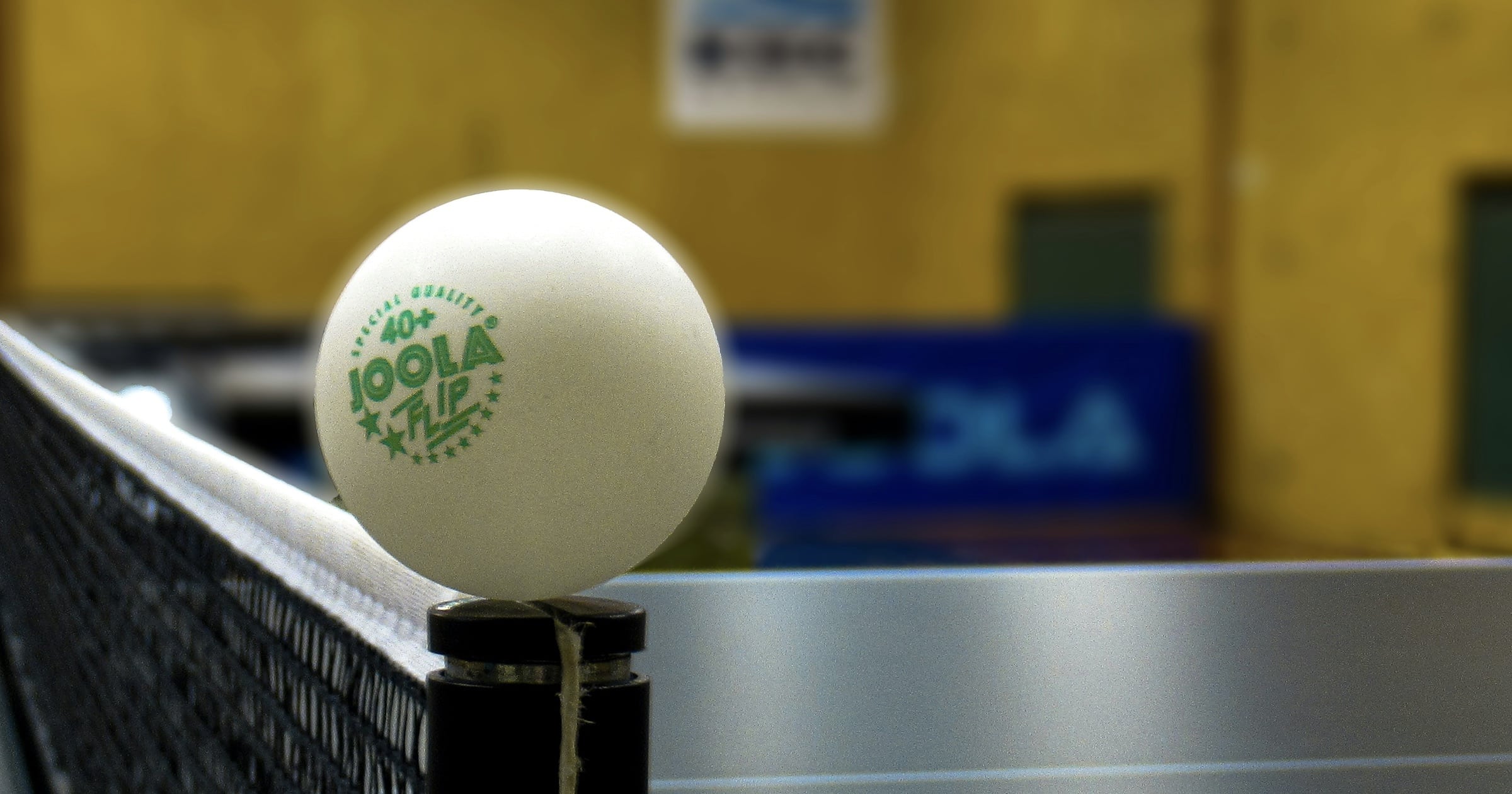

But could such fabrications also arise in making AI seem more powerful than it actually is? Consider a YouTube video that has garnered over 100 million views.

The video shows a supposed table tennis match between a robot and a top human player. Yet the video is not of an actual match. Instead, it is an exercise in computer-generated imagery (CGI). Such videos are elaborately produced, requiring a significant amount of post-production work to create the illusion of a competitive game between a robot and a human. These videos attract viewers and even win awards. But as critics point out, they are misleading: the videos are all about after-the-fact image manipulation rather than a genuine demonstration of robotic capabilities in playing table tennis.

Such image manipulation, to make it seem that a robot is matching or exceeding human table-tennis playing abilities, is itself a matter of AI. We therefore have here an apparent AI robot being impersonated by AI-generated imagery. Why do this? Because AI robots are unable to play a decent game of table tennis, but AI-generated imagery is able to make it seem as though AI robots are able to do so.

Venturing a Guess

If I had to venture a guess about the future of AGI as it will exist in the public consciousness, it is that AI will never actually achieve AGI but that AI can nonetheless be deceptively used to make it seem that AGI has been achieved. People may thus come to believe that AGI has been achieved when in fact it has not. Like the Wizard of Oz, humans will always be behind the curtain pulling strings and manipulating outcomes, making what is in fact human intervention appear to be entirely the work of computing machines.

This, then, is the danger and temptation facing AI — not that it will attain AGI but that it will be abused to make it seem that AGI has been attained. The challenge for us will be to keep our wits about us and make sure to look behind the curtain. AGI worshippers love to propagandize for AGI. The best way they can do this is by hijacking conventional artificial intelligence and making it seem to do things that it can’t actually do. Our task as AGI debunkers will be to unmask such subterfuges.

Editor’s note: This article appeared originally at BillDembski.com.