Shannon and Kolmogorov Information

The first edition of my book The Design Inference as well as its sequel, No Free Lunch, set the stage for defining a precise information-theoretic measure of specified complexity — which is the subject of this series. There was, however, still more work to be done to clarify the concept. In both these books, specified complexity was treated as a combination of improbability or complexity on the one hand and specification on the other.

As presented back then, it was an oil-and-vinegar combination, with complexity and specification treated as two different types of things exhibiting no clear commonality. Neither book therefore formulated specified complexity as a unified information measure. Still, the key ideas for such a measure were in those earlier books. Here, I review those key information-theoretic ideas. In the next section, I’ll join them into a unified whole.

Let’s Start with Complexity

As noted earlier, there’s a deep connection between probability and complexity. This connection is made clear in Shannon’s theory of information. In this theory, probabilities are converted to bits. To see how this works, consider tossing a fair coin 100 times: any particular sequence of outcomes is an event of probability 1 in 2^100 (the caret symbol here denotes exponentiation). But that number also corresponds to 100 bits of information since it takes 100 bits to characterize any sequence of 100 coin tosses (think of 1 standing for heads and 0 for tails).

In general, any probability p corresponds to –log(p) bits of information, where the logarithm here and elsewhere in this article is to the base 2 (as needed to convert probabilities to bits). Think of a logarithm as an exponent: it’s the exponent to which you need to raise the base (here always 2) in order to get the number to which the logarithmic function is applied. Thus, for instance, a probability of p = 1/10 corresponds to an information measure of –log(1/10) ≈ 3.322 bits (or equivalently, 2^(–3.322) ≈ 1/10). Such fractional bits allow for a precise correspondence between probability and information measures.
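As a minimal sketch of this conversion, the following Python snippet turns a probability into bits. The function name `shannon_bits` is chosen here for illustration and does not come from the books mentioned above.

```python
import math

def shannon_bits(p: float) -> float:
    """Return -log2(p): the information, in bits, of an event of probability p."""
    if not 0 < p <= 1:
        raise ValueError("p must lie in the interval (0, 1]")
    return -math.log2(p)

print(shannon_bits(1 / 10))     # about 3.322 bits
print(shannon_bits(2 ** -100))  # 100.0 bits, the 100-coin-toss example
```

Raising 2 to the negative of the result recovers the original probability, which is the sense in which the two measures correspond.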

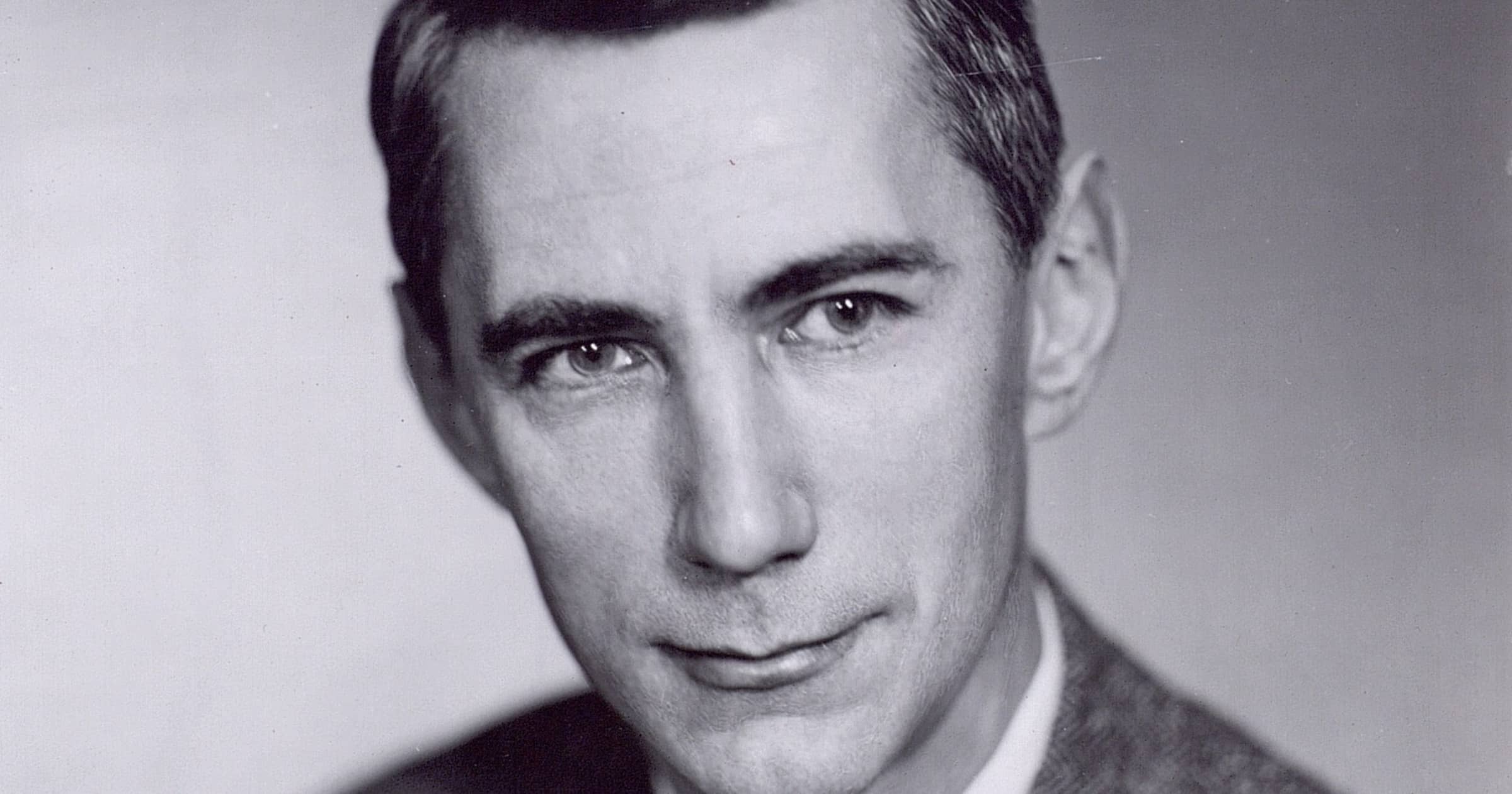

The complexity in specified complexity is therefore Shannon information. Claude Shannon (1916–2001, pictured above) introduced this idea of information in the 1940s to understand signal transmissions (mainly of bits, but also for other character sequences) across communication channels. The longer the sequence of bits transmitted, the greater the information and therefore its complexity.

Because of noise along any communication channel, the greater the complexity of a signal, the greater the chance of its distortion and thus the greater the need for suitable coding and error correction in transmitting the signal. So the complexity of the bit string being transmitted became an important idea within Shannon’s theory.

Shannon’s information measure is readily extended to any event E with a probability P(E). We then define the Shannon information of E as I(E) = –log(P(E)). Note that the minus sign is there to ensure that as the probability of E goes down, the information associated with E goes up. This is as it should be. Information is invariably associated with the narrowing of possibilities. The more those possibilities are narrowed, the more the probabilities associated with those possibilities decrease, but correspondingly the more the information associated with those narrowing possibilities increases.

For instance, consider a sequence of ten tosses of a fair coin and consider two events, E and F. Let E denote the event where the first five of these ten tosses all land heads but where we don’t know the remaining tosses. Let F denote the event where all ten tosses land heads. Clearly, F narrows down the range of possibilities for these ten tosses more than E does. Because E is only based on the first five tosses, its probability is P(E) = 2^(–5) = 1/(2^5) = 1/32. On the other hand, because F is based on all ten tosses, its probability is P(F) = 2^(–10) = 1/(2^10) = 1/1,024. In this case, the Shannon information associated with E and F is respectively I(E) = 5 bits and I(F) = 10 bits.
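The figures for E and F can be checked by brute force. The sketch below enumerates all 1,024 sequences of ten tosses and counts those belonging to each event; the helper names `probability` and `info_bits` are illustrative, and the fair-coin assumption is what makes every sequence equally likely.

```python
from fractions import Fraction
from itertools import product
import math

# All 2^10 = 1,024 equally likely sequences of ten fair-coin tosses.
outcomes = list(product("HT", repeat=10))

def probability(event) -> Fraction:
    """Exact probability of an event, given as a predicate on a toss sequence."""
    favorable = sum(1 for seq in outcomes if event(seq))
    return Fraction(favorable, len(outcomes))

def info_bits(p: Fraction) -> float:
    """Shannon information -log2(p) in bits."""
    return -math.log2(float(p))

def event_e(seq) -> bool:
    # E: the first five tosses are heads; the remaining five are unconstrained.
    return all(toss == "H" for toss in seq[:5])

def event_f(seq) -> bool:
    # F: all ten tosses are heads.
    return all(toss == "H" for toss in seq)

p_e, p_f = probability(event_e), probability(event_f)
print(p_e, info_bits(p_e))  # 1/32 5.0
print(p_f, info_bits(p_f))  # 1/1024 10.0
```

F is contained in E, so it leaves fewer possibilities open and carries more bits.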

We Also Need Kolmogorov Complexity

Shannon information, however, is not enough to understand specified complexity. For that, we also need Kolmogorov information, or what is also called Kolmogorov complexity. Andrei Kolmogorov (1903–1987) was the greatest probabilist of the 20th century. In the 1960s he tried to make sense of what it means for a sequence of numbers to be random. To keep things simple, and without loss of generality, we’ll focus on sequences of bits (since any numbers or characters can be represented by combinations of bits). Note that we made the same simplifying assumption for Shannon information.

The problem Kolmogorov faced was that any sequence of bits treated as the result of tossing a fair coin was equally probable. For instance, any sequence of 100 coin tosses would have probability 1/(2^100), or 100 bits of Shannon information. And yet there seemed to Kolmogorov a vast difference between the following two sequences of 100 coin tosses (letting 0 denote tails and 1 denote heads):

0000000000000000000000000

0000000000000000000000000

0000000000000000000000000

0000000000000000000000000

and

1001101111101100100010011

0001010001010010101110001

0101100000101011000100110

1100110100011000000110001

The first just repeats the same coin toss 100 times. It appears anything but random. The second, on the other hand, exhibits no salient pattern and so appears random (I got it just now from an online random bit generator). But what do we mean by random here? Is it that the one sequence is the sort we should expect to see from coin tossing but the other isn’t? But in that case, probabilities tell us nothing about how to distinguish the two sequences because they both have the same small probability of occurring.

Ideas in the Air

Kolmogorov’s brilliant stroke was to understand the randomness of these sequences not probabilistically but computationally. Interestingly, the ideas animating Kolmogorov were in the air at that time in the mid-1960s. Thus, both Ray Solomonoff and Gregory Chaitin (then only a teenager) also came up with the same idea. Perhaps unfairly, Kolmogorov gets the lion’s share of the credit for characterizing randomness computationally. Most information-theory books (see, for instance, Cover and Thomas’s Elements of Information Theory), in discussing this approach to randomness, will therefore focus on Kolmogorov and put it under what is called Algorithmic Information Theory (AIT).

Briefly, Kolmogorov’s approach to randomness is to say that a sequence of bits is random to the degree that it has no short computer program that generates it. Thus, with the first sequence above, it is non-random since it has a very short program that generates it, such as a program that simply says “repeat ‘0’ 100 times.” On the other hand, there is no short program (so far as we can tell) that generates the second sequence.
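To make the contrast concrete, here is a rough illustration using the two sequences above. It rests on two assumptions that are not part of Kolmogorov’s definition: Python expressions stand in for “programs,” and length is counted in source characters rather than bits. The variable names are likewise illustrative.

```python
ZEROS = "0" * 100
RANDOM_LOOKING = (
    "1001101111101100100010011"
    "0001010001010010101110001"
    "0101100000101011000100110"
    "1100110100011000000110001"
)

# A short "program" for the first sequence: repeat '0' 100 times.
short_program = '"0" * 100'

# For the second sequence, the only program we know of quotes it verbatim.
literal_program = repr(RANDOM_LOOKING)

# Running each program reproduces its sequence exactly.
assert eval(short_program) == ZEROS
assert eval(literal_program) == RANDOM_LOOKING

print(len(short_program), len(literal_program))  # 9 102
```

The first program is far shorter than the 100 symbols it generates. The second is slightly longer than its output, because it contains the whole sequence plus quotation marks.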

It is a combinatorial fact (i.e., a fact about the mathematics of counting or enumerating possibilities) that the vast majority of bit sequences cannot be characterized by any program shorter than the sequence itself. Obviously, any sequence can be characterized by a program that simply incorporates the entire sequence and then simply regurgitates it. But such a program fails to compress the sequence. The non-random sequences, by having programs shorter than the sequences themselves, are thus those that are compressible. The first of the sequences above is compressible. The second, for all we know, isn’t.
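The counting behind this fact can be sketched in a few lines. The sketch assumes that programs are themselves bit strings and that each program generates at most one sequence; the function name `max_fraction_compressible` is illustrative. There are only 2^0 + 2^1 + ... + 2^(n–c) = 2^(n–c+1) – 1 bit strings of length at most n – c, so at most that many of the 2^n sequences of length n can be compressed by c or more bits.

```python
from fractions import Fraction

def max_fraction_compressible(n: int, c: int) -> Fraction:
    """Upper bound on the fraction of n-bit sequences that have some
    generating program of length at most n - c bits."""
    if not 0 <= c <= n:
        raise ValueError("need 0 <= c <= n")
    # Number of distinct bit strings of length 0, 1, ..., n - c.
    candidate_programs = 2 ** (n - c + 1) - 1
    return Fraction(candidate_programs, 2 ** n)

# Fewer than 1 in 512 of all 100-bit sequences can be shortened by 10 bits.
print(max_fraction_compressible(100, 10) < Fraction(1, 512))  # True
```

The bound is always less than 2^(1–c), so it shrinks by half with every additional bit of compression demanded.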

Kolmogorov’s information (also known as Kolmogorov complexity) is a computational theory because it focuses on identifying the shortest program that generates a given bit string. Yet there is an irony here: it is rarely possible to say with certainty that a given bit string is truly random in the sense of having no program shorter than itself that generates it. From combinatorics, with its mathematical counting principles, we know that the vast majority of bit sequences must be random in Kolmogorov’s sense. That’s because the number of short programs is very limited and can only generate very few longer sequences. Most longer sequences will require longer programs.

Our Common Experience

But if for an arbitrary bit sequence D we define K(D) as the length of the shortest program that generates D, it turns out that there is no computer program that calculates K(D). Simply put, the function K is non-computable. This fact from theoretical computer science matches up with our common experience that something may seem random for a time, and yet we can never be sure that it is random because we might discover a pattern clearly showing that the thing in fact isn’t random (think of an illusion that looks like a “random” inkblot only to reveal a human face on closer inspection).

Yet even though K is non-computable, in practice it is a useful measure, especially for understanding non-randomness. Because of its non-computability, K doesn’t help us to identify particular non-compressible sequences, these being the random sequences. Even with K as a well-defined mathematical function, we can’t in most cases determine precise values for it. Nevertheless, K does help us with the compressible sequences, in which case we may be able to estimate it even if we can’t exactly calculate it.

What typically happens in such cases is that we find a salient pattern in a sequence, which then enables us to show that it is compressible. To that end, we need a measure of the length of bit sequences as such. Thus, for any bit sequence D, we define |D| as its length (total number of bits). Because any sequence can be defined in terms of itself, |D| forms an upper bound on Kolmogorov complexity (up to the small fixed overhead of the instructions that print the sequence). Suppose now that through insight or ingenuity, we find a program that substantially compresses D. The length of that program, call it n, will then be considerably less than |D|; in other words, n < |D|.

Although n will be much less than |D|, it’s typically not possible to show that this program of length n is the very shortest program that generates D. But that’s okay. Given such a program of length n, we know that K(D) cannot be greater than n because K(D) measures the very shortest such program. Thus, by finding some short program of length n, we’ll know that K(D) ≤ n < |D|. The number n then forms an upper bound for K(D), and in practice we use it as an estimate for K(D). Such an estimate, as we’ll see, ends up in applications being a conservative estimate of Kolmogorov complexity.
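One crude way to look for such upper bounds without any insight into the pattern is to run an off-the-shelf compressor. The sketch below uses Python’s standard `zlib` module as a stand-in, which is an assumption of this illustration rather than the method used in this series: the compressed size bounds K(D) only relative to a machine that already has the decompressor built in, and each bit is stored here as a full ASCII character. The function name `compressed_bits` is illustrative.

```python
import zlib

ZEROS = "0" * 100
RANDOM_LOOKING = (
    "1001101111101100100010011"
    "0001010001010010101110001"
    "0101100000101011000100110"
    "1100110100011000000110001"
)

def compressed_bits(bits: str) -> int:
    """Size in bits of the zlib-compressed ASCII form of a bit sequence."""
    return 8 * len(zlib.compress(bits.encode("ascii"), 9))

# Compression is lossless, so the compressed form does determine the sequence.
packed = zlib.compress(ZEROS.encode("ascii"), 9)
assert zlib.decompress(packed).decode("ascii") == ZEROS

# The patterned sequence compresses to fewer bits than the random-looking one.
print(compressed_bits(ZEROS) < compressed_bits(RANDOM_LOOKING))  # True
```

On inputs this short, the compressor’s fixed header and checksum make up much of the output, so only the comparison between the two sequences is meaningful, not the absolute sizes. A failure to compress also proves nothing: a shorter program may exist that the compressor cannot find.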

Next: “Specified Complexity as a Unified Information Measure.”

Editor’s note: This article appeared originally at BillDembski.com.