Neuroscience & Mind

Kissinger and Girard on Artificial Intelligence

As Wesley Smith has already noted, Henry Kissinger has a superb reflection in The Atlantic on artificial intelligence and the dangers it poses to our civilization. I think Kissinger is mostly right, and his observations should be discussed and explored.

His title and subtitle sum it up:

How the Enlightenment Ends

Philosophically, intellectually — in every way — human society is unprepared for the rise of artificial intelligence.

He asks:

What would be the impact on history of self-learning machines—machines that acquired knowledge by processes particular to themselves, and applied that knowledge to ends for which there may be no category of human understanding? Would these machines learn to communicate with one another? How would choices be made among emerging options? Was it possible that human history might go the way of the Incas, faced with a Spanish culture incomprehensible and even awe-inspiring to them? Were we at the edge of a new phase of human history?

Good question, although I caution against taking the term “self-learning machines” literally. Machines don’t learn. Machines don’t have minds, and can never have minds. People learn. People use machines — artifacts like written words, books, and computers — to represent ideas, and the artifacts themselves influence the way these ideas are expressed and conveyed. The medium is part of the message. But the artifacts are not thinking. People are thinking, using artifacts like artificial intelligence as the medium by which human thoughts are expressed. We often don’t understand how the medium leverages our thoughts. But it does, and often powerfully.

An example would be the printing press. When Gutenberg printed his Bible, he no doubt intended it to spread the Gospel among the people of Europe. And so it did. But the mass dissemination of the Bible, soon in vernacular translations, had a profound impact on European civilization. It opened personal reading of Scripture to the common man, and with it a wider hearing for the radical ideas of Hus and Wycliffe and, ultimately, the Reformation. The result was a cultural hurricane, culminating in the Thirty Years’ War, which depopulated much of central Europe.

Yet the printing press didn’t think, just as artificial intelligence doesn’t think. Metal and paper and silicon don’t have ideas. They represent ideas, and their representation can be very powerful and subtle and have consequences far beyond the intentions of the people who express their ideas through them.

Kissinger understands this.

Ultimately, the term artificial intelligence may be a misnomer. To be sure, these machines can solve complex, seemingly abstract problems that had previously yielded only to human cognition. But what they do uniquely is not thinking as heretofore conceived and experienced. Rather, it is unprecedented memorization and computation. Because of its inherent superiority in these fields, AI is likely to win any game assigned to it. But for our purposes as humans, the games are not only about winning; they are about thinking. By treating a mathematical process as if it were a thought process, and either trying to mimic that process ourselves or merely accepting the results, we are in danger of losing the capacity that has been the essence of human cognition.

AI is so powerful not because machines can think, but because machines leverage human thought, often in ways we do not predict or understand. Kissinger rightly calls for a deliberate effort to understand the way AI will transform us. He hints at the salient issue here:

The impact of internet technology on politics is particularly pronounced. The ability to target micro-groups has broken up the previous consensus on priorities by permitting a focus on specialized purposes or grievances. Political leaders, overwhelmed by niche pressures, are deprived of time to think or reflect on context, contracting the space available for them to develop vision… [t]he digital world’s emphasis on speed inhibits reflection; its incentive empowers the radical over the thoughtful; its values are shaped by subgroup consensus, not by introspection. For all its achievements, it runs the risk of turning on itself as its impositions overwhelm its conveniences. [Emphasis added.]

AI’s greatest threat to our civilization is its leveraging of contagion. Ideas spread via the Internet almost instantaneously, faster than we can reflect on them. We see it already in the rapid destruction of the reputations and livelihoods of people who run afoul of the Internet mob. This is just the beginning: the targeting and destruction of individuals and ideas via Internet contagion is in its infancy. It will grow.

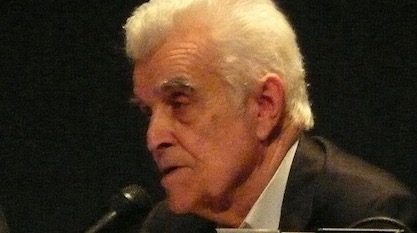

One man understood this contagion in a remarkably profound way. René Girard was a 20th-century literary scholar and philosopher who studied the mimetic contagion of desires and the destruction (and, ironically, the cohesiveness) it fosters. He believed that mimetic contagion and the methods we use to control it are the cornerstone of religion and civilization. Kissinger has rightly and perceptively raised the important questions about AI and our civilization. Girard answers them.

This is not the place to go deeply into Girard’s insights; they are profound and complex. But any genuine understanding of the impact AI will have (and is already having) on humanity must begin with René Girard.

Photo: René Girard in 2007, by Vicq [Public domain], via Wikimedia Commons.