Intelligent Design

Neuroscience & Mind

Trends in Philosophy of Science: What Does “Semantic Information” Mean?

“Information” has become a trendy word in the philosophy of science. Case in point: the phrase “semantic information,” with which materialists now seek to extend Claude Shannon’s syntactical information by inserting the concept of meaning into communications between entities. To speak of semantic information sounds redundant, like “visual sight,” but in fact the phrase points to alternative positions about information theory. Robert Marks and William Dembski “mean” something different by “semantic information,” and their usage avoids the trap of self-refutation inherent in methodological naturalism.

A few years back, as reported by Evolution News, David Wolpert of the Santa Fe Institute was warming up to the concept of information flow within the physical universe. Wolpert and William Macready are best known for the “No Free Lunch” (NFL) theorems they proved in the 1990s. Basically, they showed that, averaged over all possible search problems, no search algorithm, including evolutionary algorithms, outperforms blind search. Dembski extended the NFL theorems in his book No Free Lunch (2002) to argue for a law of Conservation of Information. He showed that blind search cannot reasonably be expected to find a specified, complex target without auxiliary information from outside the system. One cannot speed up a search for the ace of clubs in a set of 52 cards turned upside down, for instance, without learning a rule like, “A club is always to the right of a heart.” That rule must be supplied externally to improve the search over random (blind) search. Notice the similarity to Gödel’s incompleteness theorems, the second of which says that a consistent formal system rich enough to express arithmetic cannot prove its own consistency with its own resources.
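The card example can be made concrete with a little arithmetic. The sketch below is illustrative only: it assumes the target card is equally likely to sit at any face-down position, and the hint (an outside rule that narrows the search to 13 positions) is a hypothetical stand-in, not the club-and-heart rule itself. The names `average_flips` and `HINTED_POSITIONS` are invented for this illustration.

```python
from fractions import Fraction
import random

DECK_SIZE = 52
HINTED_POSITIONS = 13  # hypothetical: an outside rule narrows the search to 13 positions


def average_flips(order):
    """Average number of flips needed to reach the target, taken over every
    position in `order` where the target might be hiding (all equally likely)."""
    # flips_needed[p] = how many cards have been turned once position p is reached
    flips_needed = {position: i + 1 for i, position in enumerate(order)}
    return Fraction(sum(flips_needed.values()), len(order))


left_to_right = list(range(DECK_SIZE))
right_to_left = left_to_right[::-1]
shuffled = left_to_right[:]
random.Random(0).shuffle(shuffled)

# Without outside information, every flipping order has the same average cost.
blind_costs = {average_flips(o) for o in (left_to_right, right_to_left, shuffled)}

# With the hypothetical hint, only 13 positions remain to be searched.
hinted_cost = average_flips(list(range(HINTED_POSITIONS)))

print(blind_costs)   # a single value: 53/2, i.e. 26.5 flips on average
print(hinted_cost)   # 7 flips on average
```

Under these assumptions, no choice of flipping order improves on 26.5 flips. The improvement to 7 comes entirely from the externally supplied rule.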

Evolving Wolpert

With three more years to think about information, what has Wolpert come up with? News from the Santa Fe Institute announces, “New definition returns meaning to information.” Assisted by colleague Artemy Kolchinsky, David Wolpert wrote an article for the Royal Society journal Interface Focus, “Semantic information, autonomous agency and non-equilibrium statistical physics.” They hope to alleviate a deficiency in Shannon information theory, which dealt only with the structure of a communication (i.e., its syntax), not its semantics.

The researchers’ definition fills a hole in information theory left by Claude Shannon, who intentionally omitted the issue of the “meaning” of information in his iconic paper that created the field, “A Mathematical Theory of Communication,” in 1948.
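The gap Shannon left open can be seen in a small calculation. The sketch below assumes the simplest possible source model, in which each character is drawn independently according to its frequency in the message; richer models that track character order would give different numbers. The function name `char_entropy` and the sample sentence are invented for illustration.

```python
from collections import Counter
from math import log2


def char_entropy(text: str) -> float:
    """Shannon entropy in bits per character, assuming characters are
    independent draws with probabilities equal to their observed frequencies."""
    counts = Counter(text)
    total = len(text)
    # Sort the counts so the sum is taken in the same order for any
    # rearrangement of the same characters.
    return -sum((c / total) * log2(c / total) for c in sorted(counts.values()))


message = "the food is near the reef"
scrambled = "".join(sorted(message))  # same characters, meaning destroyed

print(char_entropy(message) == char_entropy(scrambled))  # True
```

Under this model the readable sentence and its alphabetized jumble score identically, because the measure sees only symbol frequencies, not what the symbols say.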

Here is the definition they came up with, followed by an example:

Taking cues from statistical physics and information theory, they’ve come up with a definition that emphasizes how a particular piece of information contributes to the ability of a physical system to perpetuate itself — which in the context of common biological organisms means its ability to survive. Semantic information, they write, is “the information that a physical system has about its environment that is causally necessary for the system to maintain its own existence over time.”

For example, the location of food is semantic information to the Great Barrier Reef fish because it’s essential for the fish’s survival. But the sound of a distant ship does not contribute to the fish’s viability, so it does not qualify as semantic information. [Emphasis added.]

With this definition, they want a theory able to encompass every interaction in biology and even physics. They even see information flow in rocks and hurricanes! To them, their definition helps “sort the wheat from the chaff when trying to make sense of the information a physical system has about its environment.” Any system, physical or biological, that sustains its existence has semantic information, according to this view. Notice it need not be symbolic.

In the realm of biology, understanding the role of semantic information could help answer some of the discipline’s most intriguing questions, such as how the earliest life forms evolved, or how existing ones adapt, says Kolchinsky. “When we talk about fitness and adaptation, does semantic information increase over evolutionary time? Do organisms get better at picking up information that’s meaningful to them?”

But can semantics really be “meaningful” in a realm of non-conscious entities?

Inadequate Literature Search

The paper and the news item from the Santa Fe Institute seem oblivious to the fact that intelligent design scientists have researched and written about the concept of “semantic information” for years. Here is a partial list:

2002: William Dembski discussed semantic information in No Free Lunch.

2014: Dembski covered semantic information in his book Being as Communion, which defends the philosophical view that information, not matter, is the fundamental aspect of reality. See this short video about the definition of information that allows mathematical analysis.

2015: An ID the Future podcast introduced a “Taxonomy of Information,” continued here and here. The discussions specifically distinguished semantic information from other kinds of information.

2017: David Snoke discussed whether information is a physical thing, and continued his discussion here. He relates information and entropy.

2017: Brian Miller defended semantic information from an anti-ID critique.

2018: Mike Keas and Eric Anderson denied that information resides within physical systems, like Saturn’s rings. “Information In” must be distinguished from “Information About,” they explain. The discussion continued here.

2018: Robert Marks of the Walter Bradley Center discussed Shannon information, and how meaningful information is distinguished from it by specified complexity, which is measurable. Marks and Dembski are co-founders of the Evolutionary Informatics Lab that has researched these issues for years.

A Bridge Too Far

These ID scientists freely quote the works of scientific materialists, but the favor is rarely reciprocated. It may still be a bridge too far to expect materialists to grapple with ID proponents’ original contributions to the field of informatics. Their resistance might result from the danger that a serious engagement with ID arguments might force them to accept conclusions that would contradict their philosophical assumptions. Aside from that, the materialist view is unsustainable on its own, because it falls prey to self-refutation.

Consider Kolchinsky and Wolpert’s definition again: “information that a physical system has about its environment that is causally necessary for the system to maintain its own existence over time.” What does that imply about their own theory? (1) It is a physical system, not an intellectual one. (2) It is part of an environment that maintains its own existence over time. Accordingly, their “new” definition of semantic information undermines the semantics of their own paper. Why? Because it excludes originality, logic, and truth. They’re just trying to perpetuate their existence over time.

Meaning (semantics) requires a thinking agent. Only conscious moral beings recognize meaning. Take another look at the fish example, where information about a food source helps it maintain its existence, but the sound of a distant passing ship does not. Unless Wolpert and Kolchinsky are willing to attribute thought processes to the fish, which they describe as a “physical system” (not a decision-making free moral agent), the fish is merely responding to the environment instinctively, not determining “meaning” from it. Much less does a hurricane or some other self-organizing system like Saturn’s rings know anything about meaning. In Wolpert’s conceptualization, semantics goes down the drain with the vortex in the sink. The definition is so all-encompassing as to include black holes and inertia, yet so limiting as to eliminate human creativity from possessing semantic content.

Clarity Breaks In

Clarity breaks in like sunshine on “semantic information” when free, moral, rational agency is not ruled out by arbitrary rules of methodological naturalism. An intelligent agent can program fish to respond to food sources or robots to seek out power outlets to recharge themselves. The key point is that the difference between stimuli from a food source and those from a ship is not determined by how some nebulous selective pressure acts on the organism from the outside. Instead, the difference resides in the organism’s intelligently engineered sensors and preprogrammed responses.

Intelligent agents can create complex works, including symphonies and spaceships. Intelligent agents can write papers using reason and logic. Let’s locate causation where it belongs, in the programming by a mind, not in the undirected physical system. Put semantics where it belongs, not in a food source, but in the mind of a thinking being.

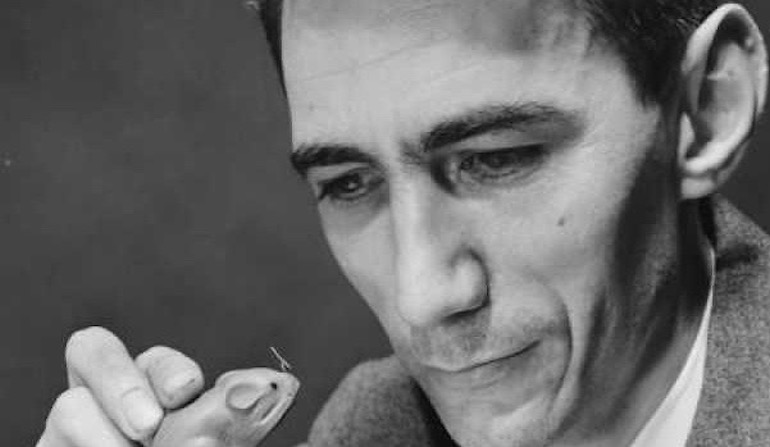

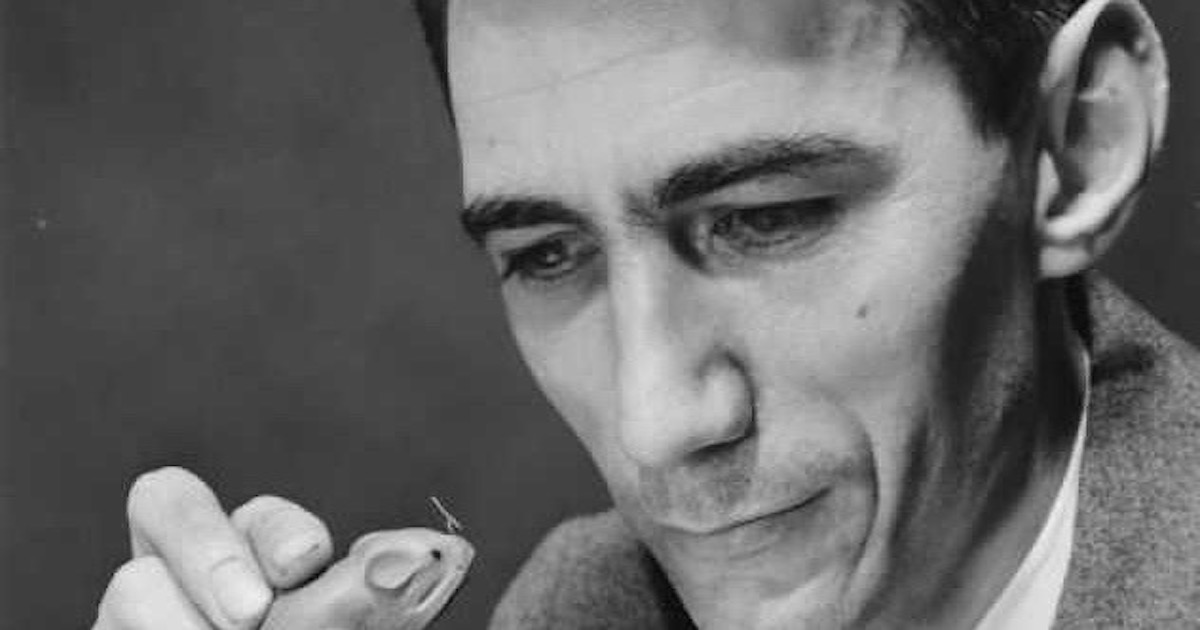

Photo: Claude Shannon, by DobriZheglov [CC BY-SA 4.0], via Wikimedia Commons.