Education

Intelligent Design

Leveraging the Design Inference for Effectively Falsifying Data

It seems that not a day passes but an unfortunate academic researcher is caught falsifying data. Recently, Nature published a detailed open-access analysis of scientific misconduct by University of Rochester physicist Ranga Dias. The headline reads: “Exclusive: official investigation reveals how superconductivity physicist faked blockbuster results.” The article remarks, “Ranga Dias was once a rising star in the field of superconductivity research.” Note the past tense. The rest of the article is devoted to the full discrediting of him and his research.

As I read this Nature article, I thought about how Dias’s ruined reputation was so unnecessary. If only Dias had been more skilled at falsifying data. Dias foolishly helped himself to previous data he had collected and then merely recycled it without passing it through an anti-design-inference filter. Intelligence agencies know all about “agent detection evasion.” Most academics don’t. Thus Dias didn’t even try to cover his tracks. Nature has the smoking gun that cooked his goose. See the image there under the heading “Odd Similarity.”

The problem with the two graphs is that if you are legitimately doing separate experiments, and especially with different substances (manganese disulfide on the left, germanium tetraselenide on the right), idiosyncrasies in the data should not be replicated in the graphs. The identical little jog in these data at a temperature of 50 kelvin is a giveaway. Scientists with their heads screwed on straight don’t believe that such coincidences are mere coincidences.
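To make the detection side of this concrete, here is a minimal sketch of how one might look for a replicated idiosyncrasy in two supposedly independent measurement series. It is an illustration only, not the method used by Nature or by any investigation; the function names, the tolerance, and the sample numbers are all hypothetical. The idea is to compare point-to-point changes rather than raw values, so that a constant offset between the two curves does not hide a shared stretch of "noise."

```python
def first_differences(series):
    """Point-to-point changes; a constant offset between curves cancels out."""
    return [later - earlier for earlier, later in zip(series, series[1:])]


def longest_shared_run(a, b, tol=1e-9):
    """Return (length, start_in_a, start_in_b) of the longest contiguous
    stretch where a and b agree element by element within tol."""
    best_len, best_i, best_j = 0, 0, 0
    # prev[j] holds the length of the matching run ending at a[i-2], b[j-1]
    prev = [0] * (len(b) + 1)
    for i in range(1, len(a) + 1):
        curr = [0] * (len(b) + 1)
        for j in range(1, len(b) + 1):
            if abs(a[i - 1] - b[j - 1]) <= tol:
                curr[j] = prev[j - 1] + 1
                if curr[j] > best_len:
                    best_len = curr[j]
                    best_i = i - best_len
                    best_j = j - best_len
        prev = curr
    return best_len, best_i, best_j


# Hypothetical readings: series_b repeats the wiggles of series_a, shifted upward.
series_a = [1.0, 1.2, 1.1, 1.5, 1.4, 1.9]
series_b = [3.0, 5.0, 5.2, 5.1, 5.5, 5.4, 7.0]

run = longest_shared_run(first_differences(series_a), first_differences(series_b))
print(run)  # (4, 0, 1): four consecutive changes coincide
```

In genuinely independent noisy measurements, a long run of coinciding changes would be highly improbable, which is why such a match invites scrutiny. A real analysis would need a tolerance suited to the instrument's precision and an estimate of how often short matches arise by chance.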

A Final Examination

There’s a joke about two college students taking their final examination side by side. The professor regarded one as the brightest, the other as the dimmest in the class. However, their completed exams revealed an unexpected similarity: both accurately answered all questions except the last one. That similarity was suspicious, but there was no evident plagiarism in the answers and no evident stray eyes from one exam to the other.

Yet on the last question (an extra-credit question), the brightest student admitted, “I don’t know the answer to this question.” Mirroring this admission, the dimmest student added a revealing word, stating, “I don’t know the answer to this question, either.” Any uncertainty over who might have been copying from whom was effectively dispelled by the telling inclusion of “either.”

Whenever I see data falsifiers caught flatfooted like Dias, it’s as though they’re the dim student in this joke foolishly adding the word “either.” That addition can only incriminate them. I ask myself how often data falsifiers are going to be this careless. But then I have to remind myself that they’ve not been properly trained. They’ve been trained to be honest researchers and to assume that the peers who read and evaluate their work will give them the benefit of the doubt and only raise doubts when the evidence of dishonesty becomes overwhelming.

And so the academy raises generation after generation of inept data falsifiers who embrace the foolish naiveté that their shenanigans are immune to discovery. Indeed, how many academic careers have foundered because of this naiveté? Sure, most of the time simply recycling already used data won’t get you into trouble. But as your career takes off and the importance of your discoveries (or fabrications) gets increasingly publicized, your work will come under increasing scrutiny. The case of Ranga Dias is only the most recent. In the last year, the biggest case of data falsification by far was the one that brought down Stanford president Marc Tessier-Lavigne.

His Endearing Habit

As with Dias, Tessier-Lavigne had the endearing habit of simply taking graphical representations of data and moving them unedited among separate experiments and research papers. He thus didn’t even try to mask his intent as he might if he had at least added some slight random modifications to his data. It took the prestigious Chicago law firm of Kirkland & Ellis and an entire panel of scientific luminaries, along with countless hours of billed legal fees, to draw up a 100-plus-page report detailing the irregularities in Tessier-Lavigne’s research. As in the Dias case, there was a smoking gun in the form of identical diagrams from two separate experiments. See page A-54 of the Kirkland & Ellis report.

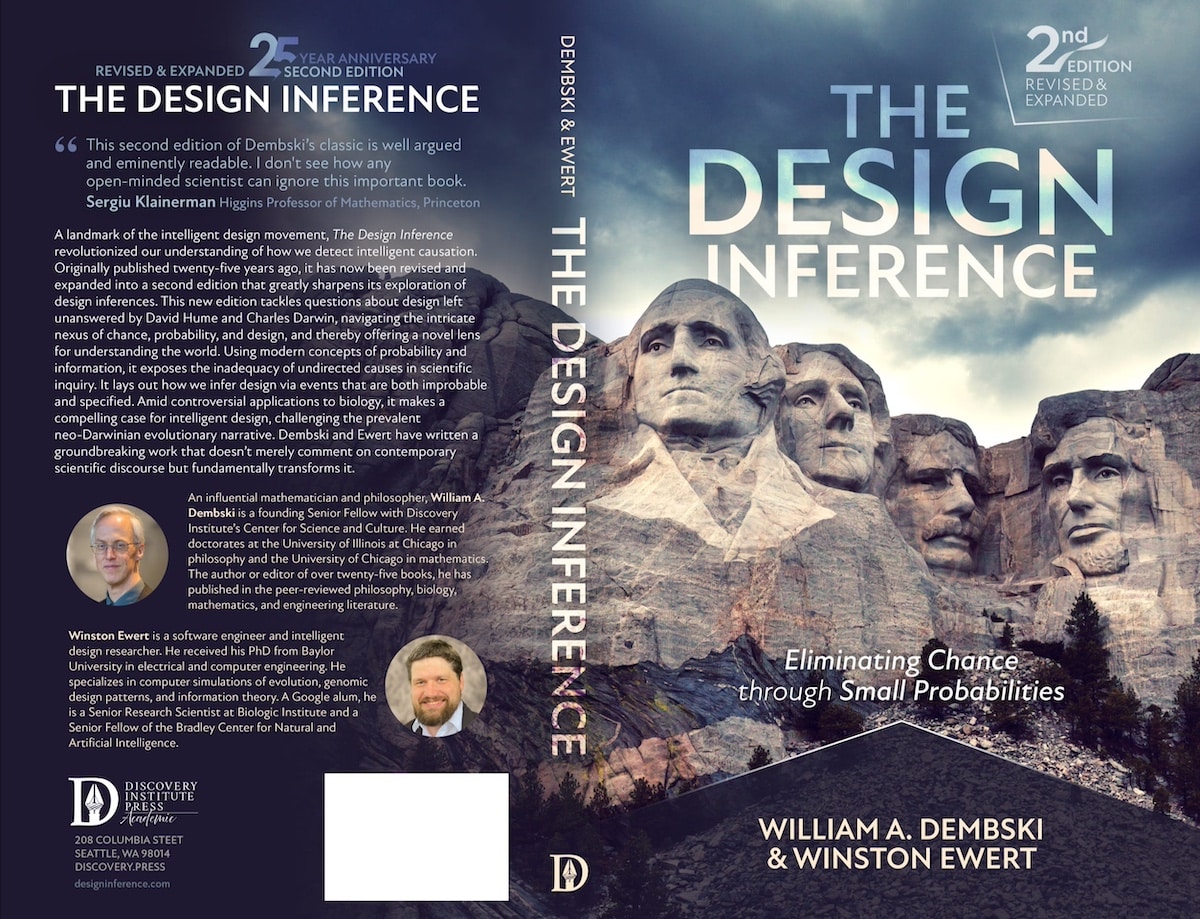

That’s quite the coincidence. Indeed, the images are indistinguishable. In case you missed the importance of the caption there, let me give it to you again: “It is not clear how the single image could be a representative control image reported for both figures given the different experimentation and samples reported.” Actually, what’s not clear is why Stanford needed to pay a prestigious Chicago law firm millions of dollars to draw a design inference implicating data falsification in Tessier-Lavigne’s research. They could simply have read my book The Design Inference and applied its method of design detection to such coincident images. Or even more simply, they could have read and given credence to Stanford freshman Theo Baker’s string of 2022–23 articles in The Stanford Daily pointing out such problems in Tessier-Lavigne’s research.

Enough said. The problem of unskilled data falsifiers in the academy needs to be redressed. To that end, I’ve put together a ten-week course titled “Mastering Data Falsification: Leveraging the Design Inference for Effectively Falsifying Data.” For students who take the entire course, do all the assignments, and fulfill the practicum at the end, I offer a micro-certificate in effective data falsification.

In addition to this course, I also offer consulting services. I use a sliding scale based on where you are in your academic career. I also charge less to would-be data falsifiers who get up to speed on the methods of this course before they actually begin serious data falsifying. By contrast, I charge more to established data falsifiers who have not used the methods of this course to mask their data falsification and who therefore often require more intensive and agonizing help to extricate themselves from the messes they’ve created.

No Guarantees

Caveat: I offer no guarantees. The methods to assist data falsification covered in this course are tried and true — they’ve been tested and they work. But if you are caught for data falsification after taking this course, that’s on you. My presumption in that case is that you did not adequately implement the techniques covered in this course. Therefore, I will not refund your tuition, to say nothing of helping with your legal expenses in case it comes to that.

Here’s a description of the course:

Course Name: Mastering Data Falsification — Leveraging the Design Inference for Effectively Falsifying Data

Instructor: Bill Dembski

Format: Online

Quarter: Fall 2024, precise dates and times to be determined

Grading: 50 percent practicum at the end, 50 percent class assignments

Main Text:

- The Design Inference: Eliminating Chance Through Small Probabilities, 2nd edition (2023), William A. Dembski and Winston Ewert.

Additional Required Texts:

- Plastic Fantastic: How the Biggest Fraud in Physics Shook the Scientific World (2009), Eugenie Samuel Reich. [The case of J. Hendrik Schön.]

- No One Would Listen: A True Financial Thriller (2010), Harry Markopolos. [The case of Bernard Madoff.]

- “Randomness by Design” (1991), William A. Dembski.

- Data Hiding and Its Applications: Digital Watermarking and Steganography (2022), eds. David Megías et al.

- Serious Cryptography: A Practical Introduction to Modern Encryption (2017), Jean-Philippe Aumasson.

Background Text:

- Elements of Information Theory, 2nd edition (2006), Thomas M. Cover and Joy A. Thomas.

Week by Week

WEEK 1: Data falsification as a force multiplier. In the competitive world of higher education, the pressure to publish groundbreaking research and achieve recognition can be immense. Data falsification can lead to a rapid increase in publications, citations, and professional recognition, acting as a catalyst for career advancement. If a data falsifier can make up results that are at once convincing and momentous, the path may even be cleared to academia’s highest accolades (can you say “Nobel”?). This week we quantify the potential benefits of data falsification. But benefits always need to be balanced against costs: data falsification is a two-edged sword if you are caught.

WEEK 2: History of data falsification. Unfortunately, most of what we know about data falsification comes from people who got caught. That in some ways is heartening because it suggests that the vast majority of data falsification may already go undetected, though design-inferential methods always raise the specter of these cold cases eventually coming to light. In any case, it’s worth having some historical perspective on the topic. Thus we review pre-digital data falsification, such as the Piltdown Man fraud, which happily for the perpetrators was not discovered till they were long gone. Nonetheless, the focus of this week is on the modern era of digital data falsification. Here the case of J. Hendrik Schön (2002) is exemplary for its boldness and ingenuity.

WEEK 3: Leveraging other forms of academic misconduct. Data falsification is great as far as it goes, but it works best in concert with other forms of academic misconduct. Plagiarism, broadly construed, involves stealing results — and not just words — from others. If these can be made to seem properly one’s own, they can rapidly increase one’s publication record and reputation. Combine plagiarism with a nuanced form of data falsification that effectively masks the making up of data, and you have a killer combination. Facility with plagiarism and adeptness at data falsification constitute hard skills. Other hard skills that can make academic misconduct yield advantage include effective mining of generative AI. But to truly make academic misconduct pay, one also needs soft skills for engineering consent. This week details the dividends you can expect from various forms of academic misconduct — provided you’re not caught.

WEEK 4: Probability, Information, and Randomness. With the stage setting for this course now behind us, we delve into the nuts and bolts of design inferences. This week we develop the basics of probability and information theory needed to understand design inferences. We also include an in-depth study of randomness. The theme of randomness is especially important in effective data falsification because data falsification so frequently runs aground on unduly obvious coincidences between supposedly divergent data sets. The proper use of randomness in removing prima facie evidence of copying among such data sets is an extremely important technique to master in becoming a master data falsifier.

WEEK 5: Triangulating on design through small probability and specification. This week we focus on the twin pillars of the design inference: specification and small probability. For an event to implicate design, it needs to have small probability. Events that are highly probable are bound to happen on their own. Even so, small probability is not enough to implicate design. Plenty of small probability events happen all the time (just get out a coin and start tossing it, and before you know it you’ll be participating in a highly improbable event). In addition to small probability you need an event to match an independently given pattern, or what we call a specification. How these twin pillars of the design inference are properly defined and work together is the topic of this week.

WEEK 6: Case studies of design inferences. With the theoretical underpinnings of design inferences out of the way, we now turn to a series of case studies showing the power and scope of design inferences. Thus we show how design inferences apply to intellectual property protection, forensic science, cryptography, biological origins, archeology, the search for extraterrestrial intelligence (SETI), and directed panspermia. Lastly, we show how design inferences apply to data falsification in general (not just academic but also financial and otherwise), underscoring the traps and pitfalls that data falsifiers face when they try to avoid the spotlight that design-inferential methods would shine on their fraud.

WEEK 7: Cryptography and digital data embedding technologies. To prevent data falsification and other types of fraud, institutions are increasingly making use of cryptography and digital data embedding technologies. Experimental protocols, for instance, might be put on a blockchain that uses cryptographic methods to prevent tampering with data. Digital data embedding technologies, such as steganography and watermarking, may insert layers of information into digital data that may be hard to remove and can provide strong evidence of provenance as well as tampering. Successful data falsifiers need to be aware of these techniques. Interestingly, the challenge of all these techniques ultimately comes down to the power of design inferences in uncovering attempts to evade them. In any case, forewarned is forearmed.

WEEK 8: Damage control for poor data falsification. What should you do if you’ve been falsifying data right along without the knowledge of this course? What if you’ve committed cases of data fraud as ill-conceived and blatant as those of Ranga Dias or Marc Tessier-Lavigne? Provided your fraud is undiscovered, there’s still hope. The most important thing is to get your hands on the experimental protocols and then carefully massage the data in them to cover your tracks. Not surprisingly, the right way to massage the data will derive from the design inference. Besides adeptly falsifying experimental protocols, you’ll also want to start issuing errata, corrections, and diagram substitutions to remove evidence of blatant fraud and indicate your good faith efforts at academic integrity. And even if so far you have been a successful data falsifier, what you learn this week will help get your ducks in a row in case you ever are charged with data falsification.

WEEK 9: Special topics. The benefits of data falsification never grow old, so this course will be of perennial interest. Nonetheless, times change and topics requiring treatment will vary. In this week we try to stay on top of the latest trends relevant to data falsification. Generative AI is much in the news lately and impacting all aspects of academic life. Thus in the present course, we examine how to exploit generative AI, especially in rewriting text to avoid plagiarism as well as in producing novel data sets for research papers. We also return to the theme of using randomness to transform data and make it unique from one research project to the next, thereby evading agency detection. Additionally, we consider that data falsification applies widely outside of academic research, particularly in the financial services industry, showing that design-inferential methods apply there both in detecting fraud and, given proper precautions, in effectively masking it. We consider especially the Ponzi scheme of Bernard Madoff and show how he could have better protected himself from scrutiny.

WEEK 10: Practicum: Proof of concept. It can be done. You can do it! This last week will be devoted to writing a research paper based entirely on falsified data and getting it accepted in some widely respected forum such as an academic journal, conference, or platform (e.g., arXiv.org). Because students are presumed new, or at least less than fully adept, at data falsification, students are urged to adopt a pen name in submitting such papers. The papers are to be written with full attention to the techniques and recommendations made in this course. In addition to the research paper, students need to write a meta paper that explains how they are using what they learned in the course to write their research paper. Half the grade for the course will be based on this paper and meta paper (the other half being based on weekly assignments). Together, the two papers must make clear how the lessons of this course have been internalized. Extra credit will be given for papers that make a big splash, being widely cited and praised for their “originality” and “insight.”
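The coin-tossing remark under Week 5 can be made concrete with a little arithmetic. The following is a minimal sketch, not the formal apparatus of The Design Inference: the function `matches_toy_specification` is a hypothetical, deliberately crude stand-in for an independently given pattern, and the sample sequences are made up. It shows that every particular sequence of n fair tosses is equally improbable, so small probability alone cannot single out any one of them; only the sequence that also matches a simple pattern stands out.

```python
from fractions import Fraction


def sequence_probability(n_tosses):
    """Probability of any one particular sequence of n fair coin tosses."""
    return Fraction(1, 2) ** n_tosses


def matches_toy_specification(seq):
    """Hypothetical stand-in for a specification: the sequence is either
    constant (HHHH...) or strictly alternating (HTHT...)."""
    constant = len(set(seq)) == 1
    alternating = all(x != y for x, y in zip(seq, seq[1:]))
    return constant or alternating


patterned = "H" * 10          # all heads
unpatterned = "HHTHTTTHTH"    # made-up, irregular sequence

for seq in (patterned, unpatterned):
    print(seq, sequence_probability(len(seq)), matches_toy_specification(seq))
# Both sequences have probability 1/1024, but only the first matches the toy pattern.
```

Ten tosses is far too short for any serious inference; the sketch only illustrates why both conditions are needed together.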

For more information about this course and my consulting services, use the contact page on my blog BillDembski.com. I look forward to hearing from you and helping you use data falsification as an effective tool to advance your career!

…

wait for it

wait for it

wait for it

wait for it

wait for it

wait for it

wait for it

wait for it

wait for it

wait for it

wait for it

wait for it

…

Disclaimer: This course description on how to become an effective data falsifier is, of course, a parody. Because it is a parody, I’m clearly NOT encouraging unethical or illegal behavior by the use of design-inferential methods. If anything, design-inferential methods are pivotal to restraining data falsification, and will prove increasingly important as data falsifiers become more devious at attempting to cover their tracks.

Cross-posted at Bill Dembski on Substack.